Publication bias is when the decision to publish is not random, but is instead conditional on the results. The most common bias favors results that find significance, even when there is no true effect, although on occasion there is bias against some unpopular result. It can seriously distort our understanding of the world. We do empirical studies in order to estimate some parameter which we can apply in contexts outside of just that one study. Taking an average, or a weighted average, of effect sizes across studies would be an intuitive way to estimate how the world is, and publication bias denies us this. Studies which aggregate in this way are called meta-analyses, and they are used particularly in the medical world.
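To make "a weighted average of effect size" concrete, here is a minimal sketch of one common weighting, where each study is weighted by the inverse of its squared standard error. The function name `pooled_estimate` and the numbers in the usage lines are made up for illustration; they do not come from any of the papers discussed here.

```python
def pooled_estimate(estimates, standard_errors):
    """Inverse-variance weighted average of study estimates.

    Precise studies (small standard errors) get more weight.
    This is one common choice of weights, not the only one.
    """
    if not estimates or len(estimates) != len(standard_errors):
        raise ValueError("need one standard error per estimate")
    weights = [1.0 / se ** 2 for se in standard_errors]
    return sum(w * b for w, b in zip(weights, estimates)) / sum(weights)


# Two hypothetical studies: the second is twice as precise,
# so it gets four times the weight.
print(pooled_estimate([1.0, 3.0], [1.0, 0.5]))
```

The pooled number is only as good as the set of studies that goes into it, which is why selective publication matters.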

It would be helpful to illustrate how publication bias affects results, sometimes in unexpected ways. Cohen and Ganong have a very recent meta-analysis out on the effects of unemployment insurance on unemployment. Theory would suggest that increasing unemployment insurance increases unemployment. This is not necessarily because people prefer the unemployment insurance to working; at least, that would be the wrong way of thinking about it. People have some minimum set of wages and conditions which they are willing to work for, and continue randomly searching through jobs until they find one exceeding that minimum. More generous insurance lets them set that minimum higher, so they search for longer. In theory, providing unemployment insurance could still be welfare enhancing, if people are risk-averse or better job fits have positive externalities.
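The search story can be sketched in a few lines. This is a toy model under loud assumptions: one job offer arrives per week, offers are drawn uniformly between `low` and `high`, and more generous insurance shows up only as a higher minimum acceptable wage. None of these specifics come from Cohen and Ganong.

```python
def expected_search_weeks(reservation_wage, low=0.0, high=1.0):
    """Expected weeks of search until an offer meets the reservation wage.

    Assumes one offer per week, drawn uniformly from [low, high].
    The number of weeks until the first acceptable offer is geometric,
    so its mean is 1 / (probability an offer is acceptable).
    """
    if not low <= reservation_wage < high:
        raise ValueError("reservation wage must lie in [low, high)")
    accept_prob = (high - reservation_wage) / (high - low)
    return 1.0 / accept_prob


# A higher minimum acceptable wage means a longer expected search.
print(expected_search_weeks(0.5))
print(expected_search_weeks(0.75))
```

Nothing here requires that people prefer benefits to work. They are simply holding out for a better match.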

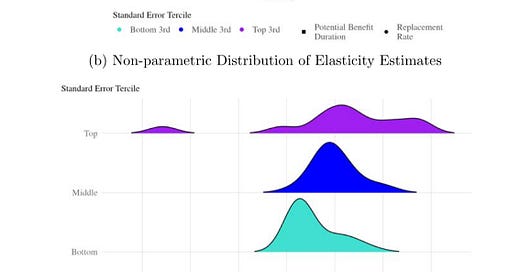

That is beside the point of the paper, which is narrower. They find that journals have been biased toward publishing papers with a substantial effect. Additional scrutiny is given to null results with large standard errors, so they are censored from publication. Results in the top and middle terciles of standard error size find larger estimates than those in the bottom, as you can see in the figure above.

Correcting for this cuts the estimates of the effect of unemployment insurance on unemployment by half. It would seem that unemployment insurance doesn’t create as much unemployment as we previously thought.
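A small simulation shows how censoring imprecise null results produces exactly this pattern. Everything in it is a hypothetical setup, not the paper's data or method: every study estimates the same true effect of 0.2, standard errors are uniform on [0.05, 0.5], and a study is published only if it is significantly positive or very precise.

```python
import random
import statistics


def simulate_literature(n_studies=20000, true_effect=0.2, precise_se=0.1, seed=0):
    """Return (all_studies, published) as lists of (estimate, se) pairs.

    Hypothetical publication rule: a study is published if it is
    significant (estimate > 1.96 * se) or precise (se < precise_se),
    so imprecise null results are censored.
    """
    rng = random.Random(seed)
    all_studies = []
    for _ in range(n_studies):
        se = rng.uniform(0.05, 0.5)
        all_studies.append((rng.gauss(true_effect, se), se))
    published = [(b, se) for b, se in all_studies
                 if b > 1.96 * se or se < precise_se]
    return all_studies, published


def mean_estimate(studies):
    """Unweighted mean of the estimates."""
    return statistics.fmean(b for b, _ in studies)


def mean_by_se_tercile(studies):
    """Mean estimate within each tercile of standard error, low to high."""
    ordered = sorted(studies, key=lambda s: s[1])
    k = len(ordered) // 3
    groups = [ordered[:k], ordered[k:2 * k], ordered[2 * k:]]
    return [mean_estimate(g) for g in groups]


all_studies, published = simulate_literature()
print("mean, all studies:       ", round(mean_estimate(all_studies), 3))
print("mean, published only:    ", round(mean_estimate(published), 3))
print("published, by SE tercile:", [round(m, 3) for m in mean_by_se_tercile(published)])
```

In this setup the full set of studies averages out close to the true effect, while the published subset overstates it, and the overstatement grows with the standard error. The size of the gap depends entirely on the assumed publication rule, so it says nothing about how large the real correction should be.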

We would like the literature to be an unbiased sample of results. At the same time, it’s not clear that we should do anything about publication bias. People have limited time, and studies have costs. If the point of learning is to cause us to take better actions, then we want to learn about the results which will induce the biggest change in action, given our priors. This is like the formulation of unbiased news in Armona, Gentzkow, Kamenica, and Shapiro (2024). The news should not report a random sample of “facts about the world”. Besides the difficulty of defining what all the possible facts are, most of them would be useless; so useless, in fact, we’d scarcely think of them as news. The continued existence of the star Vega is a fact, but not a very useful one. That there will be severe storms is an extremely useful fact to know. Likewise, if there is some claim we all believe to be true, what is the point of confirming it ad nauseam? We should, from this point of view, be most interested in claims that are untested or theoretically contestable.

In addition, meta-analyses have conceptual issues. It is not always clear that the studies being pooled are actually measuring the same thing. That is easier to ensure in medicine, which accounts for the preponderance of meta-analyses there. In medicine, you might be testing the effect of a particular drug on a disease. You have to be careful that the population and dosing regimen remain the same across studies, but you can quite reasonably aggregate multiple studies of the same thing. Suppose, by contrast, you are in economics and want to measure the effect of an additional year of education on later life outcomes. Why should we expect the effect of education to be linear with respect to dose? Which additional year it is matters. The population being treated matters. You can’t simply aggregate the effect sizes and go. Even if you are considering the same dose, its effect might vary across contexts, and the precision of studies could be correlated in some way with when they were conducted.

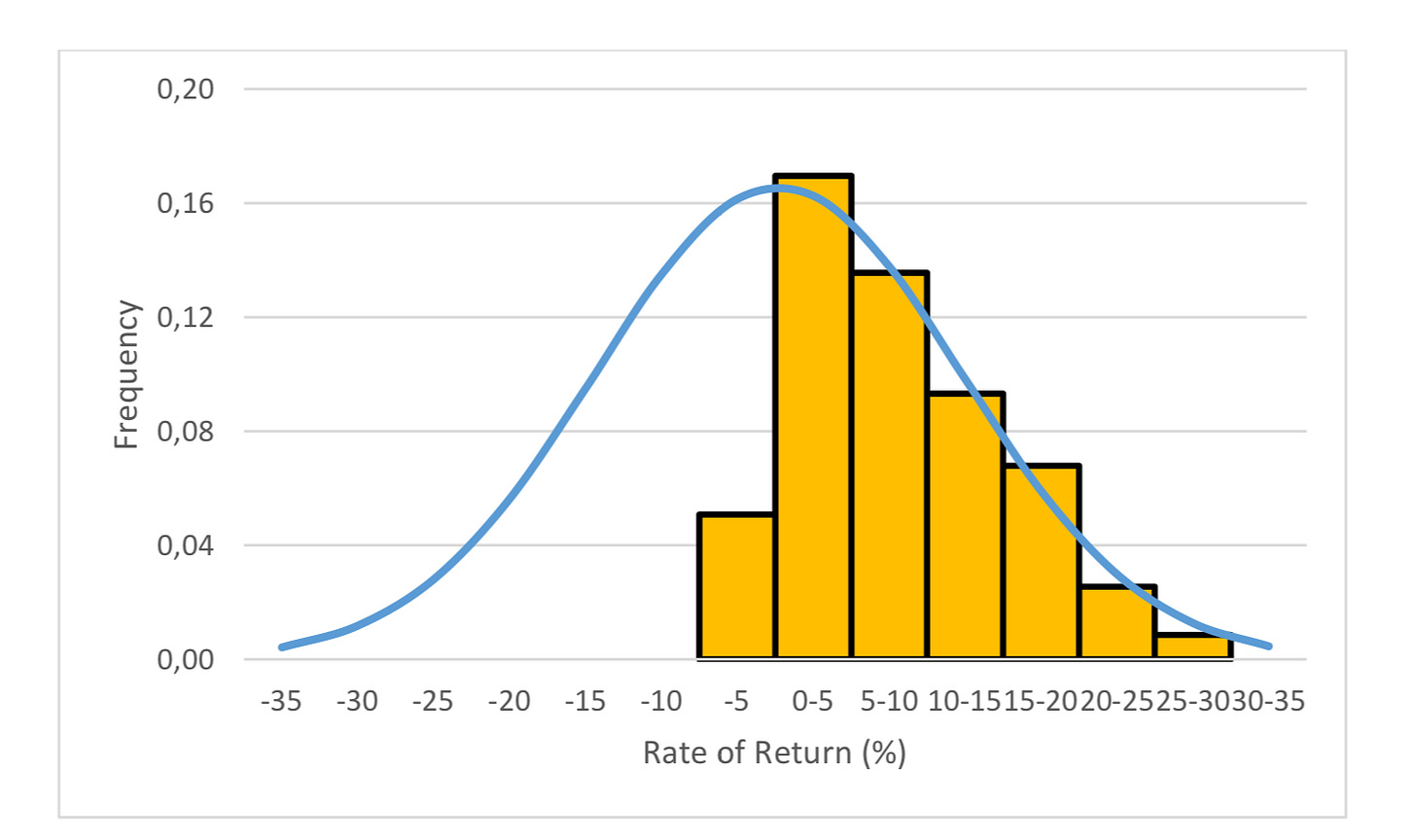

Finally, with many methods we can’t be sure we’re actually correcting for publication bias. Take education. Clark and Nielsen have a recent working paper on publication bias in the returns to education literature, which shows the same positive correlation between effect size and standard errors, and also shows how the distribution of returns to education looks like half a normal distribution, abruptly chopped off at 0.

It is strongly suggestive evidence, but not dispositive. There isn’t a theoretical reason why the rate of return couldn’t be negative, because a negative return simply means that you were worse off compared to what else you would have done in that time. Nevertheless, there are plausible reasons why the distribution would look like this even without publication bias. Choosing to expand schooling is not a random decision; we have some idea of what would be good or not good. Imagine the government only expands schooling if it has some reason to believe it would make people better off. If it is reasonably accurate, then returns from schooling would be overwhelmingly positive.
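Here is a minimal sketch of that alternative story, with no publication filter at all. The numbers are invented for illustration: potential school expansions have true returns centered on zero, the government sees a noisy signal of each return, and it only goes ahead when the signal is positive. Every expansion that happens is then measured and reported.

```python
import random


def implemented_returns(n_policies=50000, mean_return=0.0, sd_return=0.08,
                        signal_noise=0.02, seed=0):
    """True returns of the policies a government chooses to implement.

    Hypothetical selection rule: implement only if a noisy signal of the
    return is positive. A small signal_noise means an accurate government.
    """
    rng = random.Random(seed)
    kept = []
    for _ in range(n_policies):
        true_return = rng.gauss(mean_return, sd_return)
        signal = true_return + rng.gauss(0.0, signal_noise)
        if signal > 0:
            kept.append(true_return)
    return kept


def share_negative(returns):
    """Fraction of returns below zero."""
    return sum(r < 0 for r in returns) / len(returns)


accurate = implemented_returns(signal_noise=0.02)
clueless = implemented_returns(signal_noise=1.0)
print("share negative, accurate government:", round(share_negative(accurate), 3))
print("share negative, clueless government:", round(share_negative(clueless), 3))
```

With an accurate government, the observed returns pile up on the positive side of zero even though nothing was censored after the fact. A method that reads a missing left tail as evidence of publication bias would, in this toy world, correct for something that is not there.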

In my heart, I believe both of the meta-analyses are directionally correct. But we must be more cautious, and less sure! The central limit theorem is often true, even when the assumptions aren’t, but we needn’t assume everything forms a normal distribution.